Faculty Mentor:

Dr. Latika Kharb

Student Names:

Sarabjit Kaur (MCA – II)

Pragya Sharma (MCA – II)

1. Introduction

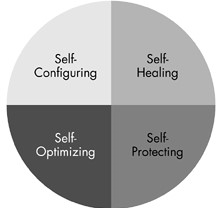

Autonomic Computing (AC) is a computing model in which systems are self-configuring, self-healing, self-optimizing and self-protecting (self-CHOP). The initiative was started by IBM in 2001 and is named after, and patterned on, the human body's autonomic nervous system. Its aim is to cope with the growing and ubiquitous complexity of system management.

2. Properties of Autonomic Computing

Fig 1: Properties of Autonomic Computing

1. Self-configuration

The administrator states what is desired, not how to accomplish it; the system configures itself to meet that goal.

2. Self-healing

The system detects and diagnoses problems automatically and, where possible, tries to fix them. An autonomic system reacts to failures and to early signs of a possible failure. Fault tolerance is an important aspect of self-healing.

3. Self-optimization

The system changes its own behaviour as needed to improve performance.

4. Self-protection

The system protects itself from malicious attacks and from end users who inadvertently make changes to software. The system itself achieves security, privacy and data protection. Security is an important aspect of self-protection.
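The detect, diagnose and repair behaviour described under self-healing can be sketched as follows. This is a minimal illustration, not a real implementation: the `Service` class, the `running` flag (standing in for a real health check) and the restart limit are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Service:
    """Hypothetical managed service; `running` stands in for a real health check."""
    name: str
    running: bool = True
    restart_count: int = 0


def self_heal(service: Service, max_restarts: int = 3) -> str:
    """Detect a failure and, if possible, repair it by restarting the service."""
    if service.running:
        return "healthy"           # detection: nothing is wrong
    if service.restart_count >= max_restarts:
        return "escalated"         # automatic repair not possible; hand over to a human
    service.restart_count += 1     # repair: a restart, assumed to succeed in this sketch
    service.running = True
    return "repaired"
```

The "escalated" outcome reflects the "if possible" qualifier: when automatic repair keeps failing, the sketch stops retrying and leaves the problem to an administrator.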

3. Architecture of Autonomic Computing

This section describes how autonomic computing systems (ACSs) can be built. We describe the autonomic elements and the software architecture.

3.1 Autonomic Element Architecture

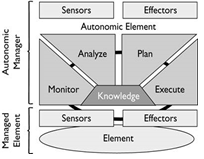

Autonomic elements (AEs) are the basic building blocks of an autonomic system. The architecture shown in Fig. 2 is IBM's autonomic 'blueprint'.

Fig 2. Architecture of Autonomic Element

An autonomic element has two parts:

1. Managed Element (ME)

2. Autonomic Manager (AM)

ACSs are built from managed elements (MEs) whose behaviour is controlled by autonomic managers (AMs).

3.1.1 Managed Element (ME)

A managed element is a component of a system; it can be hardware, application software or an entire system. Each ME includes sensors and effectors. Sensors retrieve and report information about the element's current state, which the autonomic element compares with the expected state. Effectors execute the required action.
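The sensor and effector roles can be illustrated with a small sketch. The worker-pool example, the class name `ManagedElement` and the action names are hypothetical and chosen only to make the two interfaces concrete.

```python
class ManagedElement:
    """Hypothetical managed element: a pool of workers serving a request queue."""

    def __init__(self, workers: int = 1, queue_length: int = 0) -> None:
        self.workers = workers
        self.queue_length = queue_length

    def sense(self) -> dict:
        """Sensor: report the element's current state."""
        return {"workers": self.workers, "queue_length": self.queue_length}

    def effect(self, action: str, amount: int = 1) -> None:
        """Effector: carry out an action requested by the autonomic manager."""
        if action == "add_workers":
            self.workers += amount
        elif action == "remove_workers":
            self.workers = max(1, self.workers - amount)  # keep at least one worker
        else:
            raise ValueError(f"unknown action: {action}")
```

Keeping `sense` read-only and routing every change through `effect` gives a manager one narrow interface for observing and one for acting.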

3.1.2 Autonomic Manager (AM)

The autonomic manager uses a manageability interface to monitor and control the managed element. It has four parts: monitor, analyze, plan and execute. The monitor part provides mechanisms to collect and manage information from the ME. The analyze part examines the monitored data and helps to predict future states. The plan part assigns tasks and resources based on policies, which are set by the administrator. Finally, the execute part controls the execution of the plan and dispatches the recommended actions to the ME.
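One pass through the monitor, analyze, plan and execute parts can be sketched as below. The sketch makes several assumptions: the managed element is a plain dictionary, the policy is a single load threshold, and the names `load`, `servers` and `max_load_per_server` are invented for the example. The analyze step here is a simple threshold check rather than a prediction.

```python
import math


def monitor(element: dict) -> dict:
    """Monitor: collect a snapshot of the managed element's state."""
    return dict(element)


def analyze(state: dict, policy: dict) -> bool:
    """Analyze: decide whether the observed state violates the policy."""
    return state["load"] / state["servers"] > policy["max_load_per_server"]


def plan(state: dict, policy: dict) -> dict:
    """Plan: choose the smallest server count that satisfies the policy."""
    return {"servers": math.ceil(state["load"] / policy["max_load_per_server"])}


def execute(element: dict, change: dict) -> None:
    """Execute: dispatch the planned change to the managed element."""
    element.update(change)


def mape_cycle(element: dict, policy: dict) -> dict:
    """Run one monitor-analyze-plan-execute pass and return the element."""
    state = monitor(element)
    if analyze(state, policy):
        execute(element, plan(state, policy))
    return element
```

In a running system this cycle would repeat continuously; a single pass is shown so that each part stays visible as a separate function.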

There are six components:

1. Transmit Service Component (TSC)

2. Heartbeat Monitoring Component (HMC)

3. Resource Discovery Component (RDC)

4. Service Management Component (SMC)

5. Hardware Utilization Monitoring Component (HUMC)

6. Decision-making Component (DMC)
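The list above names the components without describing their behaviour. As an illustration of what heartbeat monitoring generally involves, and not of how the HMC itself is designed, the sketch below flags nodes whose last heartbeat is older than a timeout. The class name, the node names and the explicit `now` argument are assumptions made to keep the example deterministic.

```python
class HeartbeatMonitor:
    """Generic heartbeat check: a node is suspect if it has been silent too long."""

    def __init__(self, timeout: float) -> None:
        self.timeout = timeout
        self.last_seen = {}  # node name -> time of the last heartbeat

    def beat(self, node: str, now: float) -> None:
        """Record a heartbeat received from `node` at time `now`."""
        self.last_seen[node] = now

    def failed_nodes(self, now: float) -> list:
        """Return the nodes whose last heartbeat is older than the timeout."""
        return sorted(
            node for node, seen in self.last_seen.items()
            if now - seen > self.timeout
        )
```

A real deployment would read the time from a clock such as `time.monotonic()` rather than passing it in by hand.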

3.2 Software Architecture

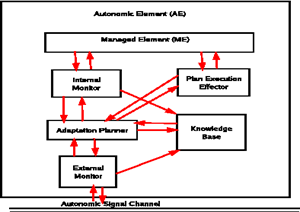

The activities in software are categorized into four groups: monitoring components, analyzing monitored data, creating a plan for adaptation and repair, and executing the plan. McCann et al. have identified two categories of autonomic system: tightly-coupled and loosely-coupled.

3.2.1 Tightly-coupled autonomic system

Tightly-coupled systems are built from intelligent autonomic elements. The elements behave autonomously and make decisions according to policies. A change in the behaviour of one element can create instability, because each element affects the others.

Fig 3. Architecture of Tightly-coupled autonomic system

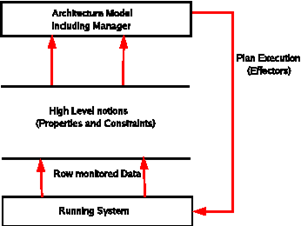

3.2.2 Loosely-coupled autonomic system

In this approach, the individual components are not autonomic. Instead, an autonomic infrastructure that is separate from the running system handles the autonomic behaviour; this infrastructure runs on another machine. The approach is architecture model-based, because the autonomic infrastructure uses an architecture model of the system. The running system is monitored, and the collected data is mapped onto the architecture model. The advantage is that software adaptation can be plugged into a pre-existing system. This approach is centralized.

Fig 4. Architecture of Loosely-coupled autonomic system
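The loosely-coupled arrangement can be sketched as a central controller that keeps a model of a running system it does not belong to. Everything specific in this sketch is an assumption: latency is used as the only monitored property, and `ArchitectureModel`, `AutonomicInfrastructure` and the `adapt` callback are illustrative names.

```python
from typing import Callable


class ArchitectureModel:
    """Model of the running system: one latency figure per component."""

    def __init__(self, max_latency_ms: float) -> None:
        self.max_latency_ms = max_latency_ms
        self.latency_ms = {}

    def update(self, component: str, latency_ms: float) -> None:
        """Map one collected measurement onto the model."""
        self.latency_ms[component] = latency_ms

    def violations(self) -> list:
        """Return the components that exceed the allowed latency."""
        return sorted(
            name for name, value in self.latency_ms.items()
            if value > self.max_latency_ms
        )


class AutonomicInfrastructure:
    """Central controller that lives outside the (non-autonomic) running system."""

    def __init__(self, model: ArchitectureModel, adapt: Callable[[str], None]) -> None:
        self.model = model
        self.adapt = adapt  # hook that changes the running system

    def observe(self, measurements: dict) -> list:
        """Map collected data onto the model, then adapt violating components."""
        for component, latency in measurements.items():
            self.model.update(component, latency)
        violating = self.model.violations()
        for component in violating:
            self.adapt(component)
        return violating
```

Because the running system appears only as measurements coming in and an `adapt` hook going out, the same controller could be attached to a pre-existing system without modifying its components.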

4. Need & Purpose

The move toward autonomic computing is driven by a desire for cost reduction and the need to lift the obstacles presented by computer system complexities to allow for more advanced computing technology.

To serve this purpose, an autonomic system should be:

1. Automatic

The system controls its own internal functions and operations. As such, it must be self-contained and able to start up without external help.

2. Adaptive

The system changes its operation in response to temporal or spatial changes, whether long-term or short-term.

3. Aware

The system senses its operational context and internal state so that it can adjust its behaviour in response to changes.

5. Levels in Autonomic Computing

Autonomic computing is an evolutionary process that consists of five levels:

1. Basic

This is the starting point. Each element of the system is managed by system administrators, who set it up, monitor it and enhance it when needed.

2. Managed

At this level, system management technologies are used to collect information from various systems and consolidate it in one place.

3. Predictive

At this level, new technologies are introduced that correlate the elements of the system. Using this correlation, the system starts to recognize patterns, predict the optimal configuration and advise the administrator on what action to take. As these technologies improve, people become more comfortable relying on the system's advice.

4. Adaptive

At this level, the system starts to take action automatically, based on the information available to it.

5. Autonomic

This is the final level, at which full automation is attained. The user interacts with the system to monitor business processes.
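The progression through the five levels can be summarised in a short sketch. The enum and the role strings are an illustrative paraphrase of the descriptions above, not an official classification scheme.

```python
from enum import IntEnum


class AutonomicLevel(IntEnum):
    """The five levels, ordered from least to most automated."""
    BASIC = 1
    MANAGED = 2
    PREDICTIVE = 3
    ADAPTIVE = 4
    AUTONOMIC = 5


def system_role(level: AutonomicLevel) -> str:
    """Summarise what the system itself does at a given level."""
    if level >= AutonomicLevel.ADAPTIVE:
        return "acts automatically"
    if level == AutonomicLevel.PREDICTIVE:
        return "advises the administrator"
    if level == AutonomicLevel.MANAGED:
        return "collects and consolidates information"
    return "is managed manually by the administrator"
```

Using an ordered enum makes the evolutionary character explicit: each level can be compared with the next, and the shift from advising to acting happens between the predictive and adaptive levels.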

6. Benefits

The main benefit is a reduced TCO (Total Cost of Ownership). Breakdowns become less frequent and fewer personnel are required to manage the system. Automation reduces deployment and maintenance effort and increases stability.

6.1 Short-term IT related benefits include:

1. Simplified user experience through a more responsive, real-time system.

2. Full use of idle processing power, including home PCs, through networked systems.

3. High availability.

4. High security system.

5. Fewer system or network errors due to self-healing.

6.2 Long-term, Higher Order benefits include:

1. Embedding autonomic capabilities in client or access devices, servers, storage systems, middleware, and the network itself.

2. Achieving end-to-end service level management.

3. Massive simulations (weather, medical) and complex calculations such as protein folding, which require processors to run 24/7 for as long as a year at a time.

7. Conclusion

Autonomic computing is an evolutionary process that helps to embed autonomic capabilities in client or access devices, servers, storage systems, middleware, and the network itself. Automation reduces deployment and maintenance effort and increases stability. In this paper, we discussed the concept of autonomic computing, its purpose and its benefits.

8. References

[1] Menasce, Daniel A. et al.: Autonomic Virtualized Environments. In: ICAS '06: Proceedings of the International Conference on Autonomic and Autonomous Systems. Washington, DC, USA: IEEE Computer Society, 2006.

[2] Kharb, L. et al.: Autonomic Computing Systems. In: The IUP Journal of Systems Management, 2007.

[3] Kephart, J. O.; Chess, D. M.: The Vision of Autonomic Computing. In: Computer 36 (2003).

[4] Anala M. et al.: Application of Autonomic Computing Principles in Virtualized Environment. DOI: 10.5121/csit.2012.2118, 2012.

[5] Vincent R.: Applications of Computer Science in the Life Sciences. COMP102, McGill University, 2011.